The impending adoption of the EU AI Act is the beginning of a transformative era in AI governance. It emphasizes a risk-based approach to regulate AI systems' deployment and use across the European Union but will have worldwide implications.

This act carries significance as it's set to impact how businesses navigate the world of artificial intelligence, and complying won’t be a walk in the park. Just as a team studies the rulebook before a match, businesses must familiarize themselves with the AI Act to ensure they're playing by the regulations and avoiding any penalties once the AI Act’s grace period ends (set for the end of 2025 or beginning of 2026).

So, let’s start at the beginning. What is the EU AI Act, what is its scope, and how can organizations prepare for it?

What is the AI Act?

Similar to the General Data Protection Regulation (GDPR), which brought comprehensive data protection laws to the EU, the AI Act aims to establish guidelines for AI technologies, addressing the potential risks associated with artificial intelligence. Notably, this regulation employs a risk-based approach, categorizing AI systems based on the level of risk they pose.

It's important to note that the AI Act is not about shielding the world from imagined conspiracy theories or far-fetched scenarios. Instead, it focuses on practical risk assessments and regulations that address threats to individuals' well-being and fundamental rights.

The legislative journey of this landmark regulation began with its proposal in April 2021. Since then, it has undergone rigorous discussions, negotiations, and refinements within the EU's legislative bodies. The European Parliament adopted its negotiating position in June 2023, and the Act is set to be finalized at the beginning of 2024.

Once adopted, the AI Act will initiate a transition period of at least 18 months before becoming fully enforced. During this grace period, companies and organizations will hustle to align their AI systems and practices with the requirements outlined in the AI Act.

The Act will not only impact entities within the EU but also global vendors selling or making their systems available to EU users. Again, like the GDPR, it is a regulation created by and for the EU, but it will impact organizations worldwide.

Purpose of the AI Act

While it may sound like the EU is getting ready for a battle against robots taking over the world, the real purpose of the AI Act is more prosaic: safeguarding the health, safety, and fundamental rights of individuals interacting with AI systems. It strives to maintain a balanced and fair environment for the deployment and utilization of AI technologies.

Think of it as a rulebook that ensures AI systems adhere to ethical guidelines and prioritize the well-being of users and society. It is a proactive measure to keep AI from becoming a Wild West where anything goes, and instead fosters an ecosystem where AI operates responsibly, ethically, and in the best interest of all involved parties.

So, who exactly does the AI Act concern?

The scope of the AI Act

Any AI system that is sold or offered on the EU market, put into service, or used within the borders of the EU falls under the AI Act’s purview. Whether it's a local developer selling their innovative AI software or a global tech giant making their AI services available to European users, the Act holds all of them to the same ethical and safety standards.

It's not just about where the AI developers are based or where the AI tools are in operation; the Act goes beyond geographical boundaries. It also covers scenarios where the output produced by AI systems is used within the EU. This means that even if an AI system is operated or hosted outside the EU, the AI Act will apply if its results are used within the Union.
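The scope conditions described above can be sketched as a simple predicate. This is a simplified illustration, not legal guidance: the `AISystem` record and its field names are hypothetical, and the Act's exemptions and formal definitions are not modeled here.

```python
from dataclasses import dataclass


@dataclass
class AISystem:
    """Hypothetical record describing where a system is offered and used."""
    name: str
    offered_on_eu_market: bool    # sold or offered in the EU
    put_into_service_in_eu: bool  # deployed for use in the EU
    used_in_eu: bool              # operated within EU borders
    output_used_in_eu: bool       # hosted elsewhere, but results used in the EU


def in_scope(system: AISystem) -> bool:
    """Return True if any of the scope conditions from the article applies."""
    return any([
        system.offered_on_eu_market,
        system.put_into_service_in_eu,
        system.used_in_eu,
        system.output_used_in_eu,
    ])


# A system hosted outside the EU whose results are consumed inside the Union.
remote_scoring = AISystem("remote-scoring", False, False, False, True)
print(in_scope(remote_scoring))
```

The point of the sketch is that the conditions are alternatives: meeting any single one is enough to bring a system into scope, regardless of where the provider is based.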

Understanding the AI Act’s risk categorization

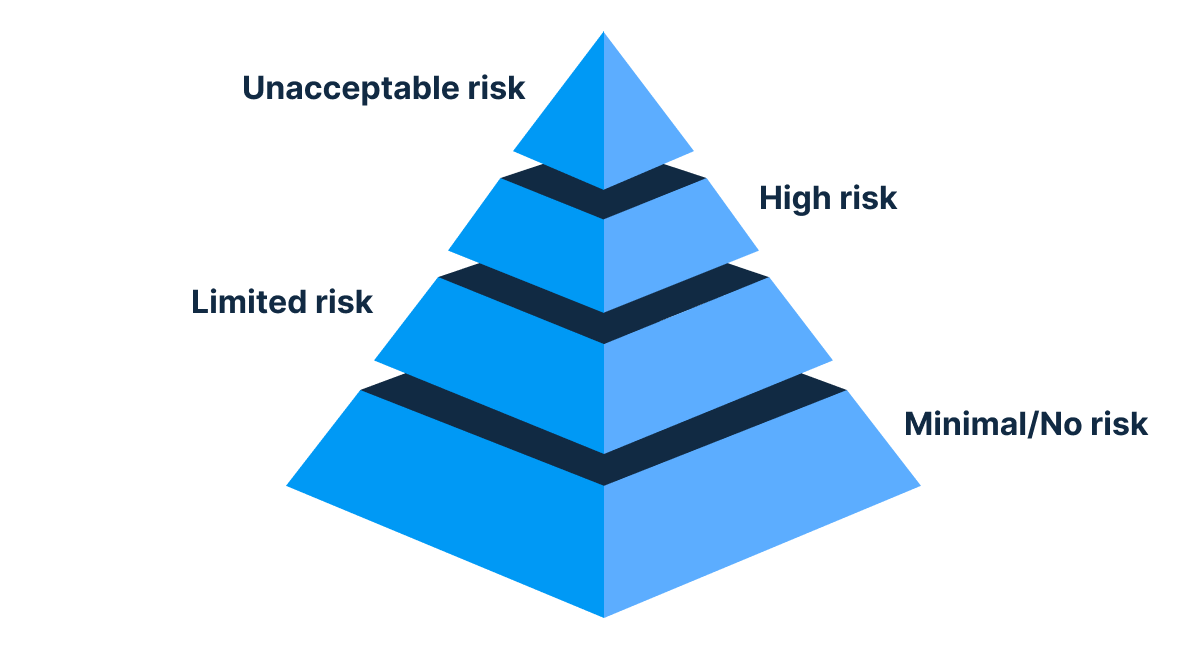

We’ve talked about what it does and who it concerns. Now let’s talk about the “how”. The AI Act introduces a systematic risk categorization mechanism, sorting AI systems into four distinct risk categories, each with specific implications and regulatory focus:

1. Unacceptable risk: AI systems falling under this category are outright prohibited. These systems present a significant potential for harm, such as manipulating behavior through subliminal messaging or exploiting vulnerabilities based on age, disability, or socioeconomic status. Additionally, AI systems involved in social scoring (evaluating individuals based on their social behavior) are strictly banned.

2. High risk: Systems categorized as high risk are subject to the most stringent regulations. This category includes AI systems used in safety-critical applications (such as medical devices or biometric identification) and specific sensitive sectors like law enforcement, education, and access to essential services. The regulatory focus emphasizes ensuring conformity with predefined standards, transparency, human oversight, and documentation practices to mitigate potential risks.

3. Limited risk: AI systems with limited potential for manipulation fall under this category. Although not as strictly regulated as high-risk systems, they are required to adhere to transparency obligations. Examples include systems that must disclose to users that they are interacting with an AI (like chatbots), so that AI involvement in the process is clear.

4. Minimal/No risk: This category encompasses AI systems posing minimal or no risk to individuals (for example, some video games and spam filters). The AI Act does not mandate extensive oversight or strict compliance measures for these systems, because their impact on health, safety, or fundamental rights is negligible.

Understanding the categorization is fundamental for organizations to evaluate their AI systems accurately. It ensures alignment with the Act's stipulations, allowing businesses to adopt the measures and compliance strategies that apply to the specific risk category of each AI system.
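For teams that keep an inventory of their AI systems, the four categories can be represented as a small lookup table. The sketch below is only illustrative: the enum, the `REGULATORY_FOCUS` mapping, and the wording of each entry paraphrase this article rather than the legal text, and assigning a real system to a tier requires legal analysis.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Regulatory focus per tier, paraphrased from the list above (not official wording).
REGULATORY_FOCUS = {
    RiskTier.UNACCEPTABLE: ["prohibited"],
    RiskTier.HIGH: [
        "conformity with predefined standards",
        "transparency",
        "human oversight",
        "documentation",
    ],
    RiskTier.LIMITED: ["transparency obligations"],
    RiskTier.MINIMAL: [],
}


def may_be_deployed(tier: RiskTier) -> bool:
    """Only the unacceptable-risk tier is banned outright."""
    return tier is not RiskTier.UNACCEPTABLE


for tier in RiskTier:
    print(tier.value, may_be_deployed(tier), REGULATORY_FOCUS[tier])
```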

Specific requirements for high-risk AI systems

High-risk AI systems face the most obligations under the AI Act, requiring compliance with a comprehensive set of standards to ensure safety, accuracy, and the protection of the fundamental rights of EU citizens. The specific requirements for these systems include:

Risk management, data governance, and documentation practices

Developers of high-risk AI systems must establish robust risk management protocols. This involves a systematic assessment of potential risks associated with the AI system's deployment, implementation of data governance practices ensuring data quality, and maintaining detailed documentation outlining the AI system's development, function, and operation.

Transparency, human oversight, and accuracy standards

High-risk AI systems must provide clear information, especially when interacting with users, allowing them to comprehend and acknowledge that they are engaging with an AI system. Additionally, human oversight is necessary to monitor and intervene where required. Accuracy standards also play an important role, ensuring the reliability and correctness of the AI system's outputs.

Registration in an EU-wide public database

AI systems in the high-risk category are also required to be registered in an EU-wide public database, allowing for better oversight, traceability, and accountability within the EU's regulatory framework.

Unique cybersecurity standards

Developers handling high-risk AI systems must implement cybersecurity measures to counter unique vulnerabilities. This includes defending against 'data poisoning' attempts that corrupt the training data, guarding against 'adversarial examples' aimed at tricking the AI, and addressing inherent model flaws.

Compliance strategies and preparation for organizations

As the AI Act moves closer to adoption, organizations must proactively assess their AI systems to ensure compliance with the Act's requirements.

The AI Act's provisions may pose challenges for businesses, particularly in sectors heavily reliant on AI technologies. Compliance demands significant adjustments to existing AI governance, risk management practices, and documentation procedures.

While we wait for the final version of the AI Act to be negotiated and published, here is what organizations can expect to undergo in the very near future:

Self-assessment: Organizations should undertake a thorough evaluation of their AI systems against the AI Act's categories of risk. Understanding where each system falls within these risk categories is pivotal. This self-assessment will determine the level of compliance needed and the corresponding obligations to meet under the AI Act.

Understanding and categorizing AI systems: Categorizing AI systems correctly ensures alignment with the specific requirements and obligations detailed in the AI Act.

Grace period preparation: With the 18-month grace period set to provide a transitional phase, organizations should utilize this time effectively. Prioritize internal audits and assessments of AI systems to identify potential gaps between existing practices and the AI Act's requirements. Develop and initiate strategies to bridge gaps to ensure a smooth transition into full compliance.

Oversight structures and collaboration: Establish structures within your organization dedicated to overseeing AI governance, risk management, and compliance.

Incident reporting: The AI Act's emphasis on incident reporting and post-market monitoring calls for strategies to track and improve AI safety. Organizations must gear up to manage and report incidents effectively, and use the insights from those incidents to improve their AI design, development, and deployment practices.
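The self-assessment and gap-identification steps above can be sketched as a simple set comparison. This is a minimal sketch under stated assumptions: the requirement labels paraphrase the high-risk obligations listed earlier in this article, and the inventory entry is a hypothetical example, not a real system.

```python
# Illustrative labels for the high-risk requirements described in this article;
# they are not official terms from the Act.
HIGH_RISK_REQUIREMENTS = {
    "risk_management",
    "data_governance",
    "documentation",
    "transparency",
    "human_oversight",
    "accuracy",
    "eu_database_registration",
    "cybersecurity",
}


def compliance_gaps(implemented: set[str]) -> list[str]:
    """Return requirements not yet covered, sorted for stable reporting."""
    return sorted(HIGH_RISK_REQUIREMENTS - implemented)


# Hypothetical inventory: system name -> controls already in place.
inventory = {
    "cv-screening-tool": {"documentation", "transparency", "risk_management"},
}

for name, controls in inventory.items():
    print(name, compliance_gaps(controls))
```

Running a comparison like this during the grace period gives each system a concrete list of open items, which can then be assigned to whoever owns AI governance in the organization.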

How Safetica can assist you in preparing for the AI Act

Safetica specializes in cybersecurity measures and provides essential support in navigating the complexities of AI compliance. Our Data Loss Prevention (DLP) solutions offer tailored approaches, empowering companies with the necessary tools to make informed decisions regarding the integration of cutting-edge AI technologies.

Safetica's proactive blocking and risk assessment capabilities serve as a bulwark for organizations, fortifying their data security posture and ensuring responsible utilization of AI tools within the workplace. By leveraging Safetica's expertise and tools, businesses can confidently harness the potential of advanced AI technologies while safeguarding sensitive information.

With Safetica's support, companies can establish a robust strategy that aligns with the stringent requirements of the EU AI Act, ensuring compliance and data protection in an evolving digital landscape.
